In today’s article I want to debunk what I believe are some myths and misconceptions about testing, based on nearly two decades of writing automated tests for back-end applications.

I will start by explaining key concepts about automated testing that will serve as a foundation, before discussing these myths.

If you know the basics of testing you may want to jump straight to the Breaking Myths section.

Testing Basics

Automated Testing

When I started my career, most testing that we did was manual. This was a time-consuming, error-prone and expensive process.

Fortunately, we have learnt from this and now most of our testing is automated, which allows us to run our tests anytime we want, as many times as we want.

Test Coverage

When executing automated tests we can generate metrics, like test coverage.

Test coverage (strictly speaking, line coverage) measures the proportion of lines of code that are executed by at least one test. 100% coverage means that every line of code is executed at least once, and 50% coverage means that half of your lines of code are never executed by any test.
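As a rough illustration of the arithmetic, here is a minimal Python sketch. The function name and the line numbers are made up for this example, and real coverage tools do far more work to decide which lines count as executable.

```python
from __future__ import annotations


def line_coverage(executed: set[int], executable: set[int]) -> float:
    """Return the percentage of executable lines hit by at least one test."""
    if not executable:
        # Nothing to cover: treated as 100% (a convention chosen for this sketch).
        return 100.0
    covered = executed & executable
    return 100.0 * len(covered) / len(executable)


# Hypothetical module with 6 executable lines; the tests only reach 3 of them.
print(line_coverage({1, 2, 3}, {1, 2, 3, 4, 5, 6}))  # 50.0
```

Note that the number only says which lines ran, not whether the tests asserted anything useful about them.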

Types of Tests

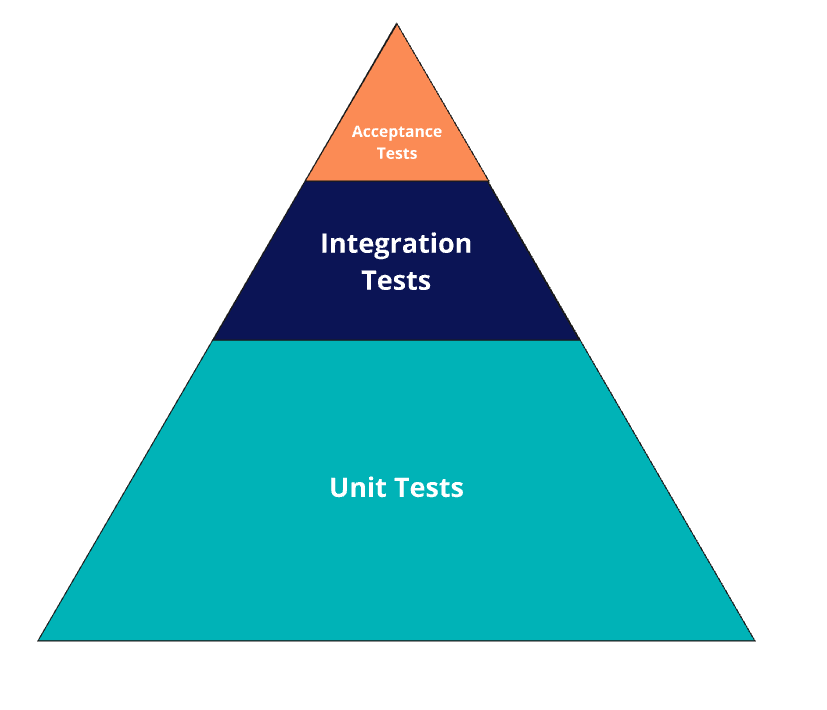

Nowadays there are different types of tests, but we can summarise them in three categories: Unit, Integration and Acceptance tests.

Unit tests are focused on testing core business logic. They test a small piece of code, so when they fail it’s easy to identify the issue. They are very quick because they do not rely on external dependencies (databases, disk, network, etc.).

Integration tests are key to identifying interoperability problems. They test how code works with other parts of the system, like databases, disk, network, etc. They are slower than unit tests and it takes more time to identify the root problem.

Acceptance tests are designed to validate features from the end-user perspective, so they are also called end-to-end tests. They are more expensive to write, maintain and run than the other tests, but they also play a key role in detecting bugs.

Testing Pyramid

The concept of the Testing Pyramid was introduced in 2009 by Mike Cohn, and it proposes that we should have more unit tests than integration tests, and more integration tests than acceptance tests.

The rationale is that a healthy test base should rely heavily on unit tests because they are cheaper to write, execute and maintain. Next in line come integration tests, so we should add some of these, but no more than unit tests. Lastly, acceptance tests are also important, but we should have fewer acceptance tests than integration tests.

A popular Google blog suggests a 70/20/10 split, although it makes very clear that those values are just guidelines. Putting those numbers together, the pyramid looks something like this.

Breaking Myths

Myth 1: test coverage is a hard goal

Myth 2: test pyramid ratios are a hard goal

Myths 1 and 2 refer to believing that these testing metrics are goals in themselves, which is not true.

Metrics are measures that help us assess, compare and track something using numbers. Their aim is to help us understand reality so we can make decisions.

One example of a metric that is used as a goal is the SLO (technically the metrics are the SLIs, but that’s another discussion). SLOs are usually goals that are critical to the success of a product, service or business.

However, having tests is not a business need. Tests are tools that help us assess the quality of our products, but we can still serve our customers if test coverage is low, even zero (I know a real case of this).

Ideally, we’ll have automated tests that are well thought out and useful, and as many of them as possible and practicable. However, aiming for a specific test coverage or proportion of tests is just arbitrary.

Instead, these metrics are extremely useful to monitor how test coverage or pyramid ratios change over time. If there’s a dramatic change over a period of time, that should certainly raise some alerts!

When I joined a new company, I quickly noticed that a repository had only 28% of automated tests as unit tests, and 54% as integration tests. Just seeing that we had twice as many integration tests as unit tests was a clear signal that we needed to dig deeper and understand the context and any possible actions.
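To make that kind of check concrete, here is a small Python sketch that turns test counts into shares and flags a shape worth investigating. The counts are invented so that they reproduce the 28% and 54% figures above (the acceptance count is simply the assumed remainder), and the function names are hypothetical.

```python
from __future__ import annotations


def pyramid_shares(counts: dict[str, int]) -> dict[str, float]:
    """Return each test type's share of the suite, as a percentage."""
    total = sum(counts.values())
    if total == 0:
        return {kind: 0.0 for kind in counts}
    return {kind: round(100.0 * n / total, 1) for kind, n in counts.items()}


def follows_pyramid(counts: dict[str, int]) -> bool:
    """True when unit >= integration >= acceptance, i.e. a pyramid shape."""
    return counts["unit"] >= counts["integration"] >= counts["acceptance"]


# Illustrative counts only, not real data from any repository.
counts = {"unit": 140, "integration": 270, "acceptance": 90}
print(pyramid_shares(counts))
print(follows_pyramid(counts))
```

A `False` here is a prompt to dig deeper and understand the context, not a build failure: the point is to watch the shape and its trend, not to enforce a number.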

Myth 3: test coverage leads to poor-quality tests

A few times I have heard complaints about poorly written automated tests, indicating that the cause is that teams are too focused on meeting a test coverage threshold.

I believe that this is a fallacy.

Consider the case of a repository where the code is poorly written, bad designs are evident and anti-patterns are in abundance. Most people would naturally question the development process, the quality of the team, the code review process, etc.

Why is it then that when automated tests suffer from the same issues the culprit is always the test coverage?

Although I have already explained why test coverage should not be a goal, I’ve never seen any proof that links bad tests to this goal. It is my belief that this mythical relationship is mentioned so frequently because it’s a lot easier than tackling the real problems.

Just like with any other piece of code, the reasons for bad tests are usually that software developers don’t know how to write tests, that code reviewers don’t actually review tests when they are added, that reviewers don’t know how to write tests either, and so on.

These problems can be addressed by upskilling ourselves and others, and by making improvements to our development flow. But we can only do that if we are open to seeing the real cause of our issues.

Myth 4: the testing pyramid does not apply to all tech stacks

In all these years, I have yet to come across a back-end language or framework that prevents adding unit tests. But on one occasion, some software developers argued that they did not need to follow the testing pyramid advice, because they wrote code in Ruby on Rails.

They explained to me that due to how Rails ties Views, Controllers and Models together, it was difficult to write unit tests, which are the basis of the testing pyramid.

Although Rails does add some complexity to the task, I still believe that the main problem in these situations is that the business logic is too coupled with Rails. In turn, the solution is actually quite simple: business logic should be written in its own classes or modules, that can be tested independently of the framework.

Following this approach would not only allow for more unit tests in the code base, but also improve the overall quality of the code.
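The idea is framework-agnostic, so here is a minimal sketch in Python rather than Rails. The discount rule, the `Order` type and all names are hypothetical; what matters is that the business logic lives in plain code that imports nothing from a web framework or ORM.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Order:
    """Plain value object: no framework or database types involved."""
    subtotal_cents: int
    is_first_order: bool


def discount_cents(order: Order) -> int:
    """Hypothetical rule: 10% off a first order, capped at 2000 cents."""
    if not order.is_first_order:
        return 0
    return min(order.subtotal_cents // 10, 2000)


# Unit tests: no server, no database, no framework boot time.
def test_first_order_gets_ten_percent():
    assert discount_cents(Order(5000, True)) == 500


def test_discount_is_capped():
    assert discount_cents(Order(50000, True)) == 2000


def test_returning_customer_gets_no_discount():
    assert discount_cents(Order(5000, False)) == 0
```

A controller or model would then only translate between the framework’s objects and `Order`, which leaves very little framework-bound logic to cover with slower tests.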

Myth 5: test doubles should not be used because they add risk

Another concern that I have heard is about the risks of writing unit tests using doubles, instead of relying on integration tests that can verify the full flow.

Although that statement is partially true, it’s also misleading, because it ignores the fact that almost everything we do as software developers is about risk and how to properly manage it.

Most teams that have healthy test suites follow a testing pyramid, and this is not by chance. We add lots of unit tests to mitigate the risk of humans writing code, and we add integration tests to mitigate the risks of the shortcomings of unit tests.

We should not refuse to write unit tests because they add risk. Instead, we must ask ourselves how we can best manage this risk.
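As a sketch of what a unit test with a double can look like, the Python example below uses `unittest.mock.Mock` from the standard library. `CheckoutService` and the gateway’s `charge` method are hypothetical names invented for this illustration.

```python
from unittest.mock import Mock


class CheckoutService:
    """Business logic that depends on a payment gateway passed in from outside."""

    def __init__(self, gateway):
        self._gateway = gateway

    def pay(self, order_id: int, amount_cents: int) -> str:
        if amount_cents <= 0:
            raise ValueError("amount must be positive")
        accepted = self._gateway.charge(order_id, amount_cents)
        return "paid" if accepted else "declined"


def test_pay_reports_declined_charge():
    # The double stands in for the real gateway: no network call is made.
    gateway = Mock()
    gateway.charge.return_value = False

    service = CheckoutService(gateway)

    assert service.pay(42, 1500) == "declined"
    gateway.charge.assert_called_once_with(42, 1500)
```

The risk that remains is that the double drifts away from how the real gateway behaves. That is exactly the gap a small number of integration tests are there to cover.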

Myth 6: unit tests can interact directly with the database

Quality unit tests should be free of dependencies, to make them as fast as possible, while still ensuring that business logic is tested. Database connections, no matter how fast, still take valuable time that could be used to execute more tests.

To serve as a concrete example for one of my teams, I once reduced the execution time of a test by half by using some hardcoded IDs instead of getting these IDs from the database (and I may have been able to improve that further if I had dived deeper into the test dependencies).

In some applications, it may be really hard to see how some code can be tested with unit tests without a database. Sometimes the answer may be to decouple some logic, others to use test doubles, or maybe something else.

From my experience of writing countless tests, the main reason why a unit test is hard to write is that the code was not written with testing in mind. In these cases, TDD is our best ally, but that is also a topic for another day.

Invariably, there will be cases when the only way of testing what we need is by having external dependencies, like a database connection. When this happens, it means that what we need is an integration test and not a unit test, and that is fine as long as we call it an integration test.
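The distinction can be sketched in Python as follows. The same `greeting` logic is tested once as a unit test with an in-memory stand-in, and once as an integration test against a database. All names are hypothetical, and an in-memory SQLite database is used here only as a convenient stand-in for whatever database an application really talks to.

```python
import sqlite3


class InMemoryUserRepo:
    """Test double: same interface as the real repository, no database."""

    def __init__(self, names_by_id):
        self._names = dict(names_by_id)

    def find_name(self, user_id):
        return self._names.get(user_id)


class SqliteUserRepo:
    """Repository backed by a database connection."""

    def __init__(self, conn):
        self._conn = conn

    def find_name(self, user_id):
        row = self._conn.execute(
            "SELECT name FROM users WHERE id = ?", (user_id,)
        ).fetchone()
        return row[0] if row else None


def greeting(repo, user_id):
    """Business logic under test; it only knows the repository interface."""
    name = repo.find_name(user_id)
    return f"Hello, {name}!" if name else "Hello, guest!"


def test_greeting_unit():
    # Unit test: hardcoded data, no connection to open or tear down.
    repo = InMemoryUserRepo({1: "Ada"})
    assert greeting(repo, 1) == "Hello, Ada!"
    assert greeting(repo, 2) == "Hello, guest!"


def test_greeting_integration():
    # Integration test: exercises the SQL and the schema, and is named as such.
    conn = sqlite3.connect(":memory:")
    try:
        conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
        conn.execute("INSERT INTO users (id, name) VALUES (?, ?)", (1, "Ada"))
        assert greeting(SqliteUserRepo(conn), 1) == "Hello, Ada!"
    finally:
        conn.close()
```

Keeping the two kinds of test clearly named and separated also makes it possible to run the fast ones on every change and the slower ones less often.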

Myths are very easy to believe, spread as fast as a virus, and it takes a lot of effort and thought to see through them.

Everyone can be affected by these myths, but junior developers are the most vulnerable, because they naturally look up to their experienced counterparts to learn the good and bad practices, and that learning will usually last for a long time.

I have tried to be as clear as possible with my reasoning, so you can judge my opinions on their own merit. And if you disagree, that is fine: I did once too, but the path I have travelled has allowed me to see things from a different perspective. If instead you agree: congratulations! Accepting that the problem may be us and not the tools takes courage, and is not something that everyone is willing to acknowledge.

Happy testing!

José Miguel

Share if you find this content useful, and Follow me on LinkedIn to be notified of new articles.